Results: Should autonomous cars be allowed to speed?

In our previous reader poll, we asked you, dear readers, who should be allowed to ‘drive’ an autonomous car. The result was interesting: while the idea of kids riding in autonomous cars by themselves didn’t get a lot of support, the majority of the participants supported letting unlicensed seniors and legally blind people ride in autonomous cars alone.

This curious result made us realize that there is something more interesting at play here.

Are we concerned about who would be responsible should things go wrong? Clearly, when kids are behind the wheel, they are less likely to be held responsible for anything that goes wrong. So we decided to poke around this topic of responsibility a little bit more.

Two weeks ago, we asked how strictly an autonomous car should follow the speed limit and, if it gets a ticket for speeding, who should be held responsible.

How strictly should an autonomous car driving in a city follow the speed limit?

I don’t know about you, but members of the Open Roboethics initiative agree that people tend to drive just a tad faster than the speed limit. Drivers (hopefully) know that speed limits are there for good reasons. But that doesn’t mean we should get a speeding ticket every time we go 1 km/h above the speed limit. There’s a certain window of speeds that is typically tolerated in traffic enforcement, and that makes our driving experience much less stressful.

[pullquote]33% of the participants said that autonomous cars should never go above the speed limit.[/pullquote] But when it comes to autonomous cars in city driving scenarios, it seems that people aren’t willing to give the cars that much leeway. In fact, 33% of the participants said that autonomous cars should never go above the speed limit, while 21% said that they should be allowed to deviate from the limit only slightly (up to 5 km/h or 3 mph). And 11% said that the cars can deviate quite a bit (up to 20 km/h or 12 mph) during city driving.

Interestingly, 18% said that autonomous cars should not be subject to the same speed limit as human-driven cars. Does that mean the cars should have a higher speed limit, or a lower one? It’s impossible for us to tell from the poll results. But we could imagine autonomous cars having on-board speed limit algorithms that automatically determine safe driving speeds for different road conditions, environments, the car’s calculated momentum (thanks Robohub for this idea), etc., regardless of the actual speed limit for the road. Maybe that would lead to safer and more efficient driving in general.
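To make the idea of an on-board speed limit algorithm a bit more concrete, here is a minimal sketch in Python. It is purely illustrative and not something our poll asked about or any manufacturer’s method: it assumes the car only needs to be able to stop within the distance it can see, using the textbook stopping-distance relation (reaction distance plus braking distance). The function name `safe_speed_kmh`, the friction values, and the 0.5-second reaction time are all our own assumptions.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def safe_speed_kmh(sight_distance_m: float, friction: float,
                   reaction_time_s: float = 0.5) -> float:
    """Highest speed (km/h) at which the car can still stop within
    the distance it can see.

    Hypothetical model: stopping distance = v * t + v^2 / (2 * mu * g),
    where t is the reaction time and mu the tyre-road friction coefficient.
    """
    if sight_distance_m <= 0 or friction <= 0:
        return 0.0
    decel = friction * G  # maximum braking deceleration in m/s^2
    # Positive root of v^2 + 2*decel*t*v - 2*decel*d = 0
    v = -decel * reaction_time_s + math.sqrt(
        (decel * reaction_time_s) ** 2 + 2 * decel * sight_distance_m
    )
    return v * 3.6  # convert m/s to km/h


# Same 60 m of visible road, assumed friction for dry vs. wet asphalt
dry = safe_speed_kmh(60, friction=0.7)
wet = safe_speed_kmh(60, friction=0.4)
print(f"dry: {dry:.0f} km/h, wet: {wet:.0f} km/h")
```

Under these assumptions the recommended speed drops on a wet road even though the posted limit stays the same, which is the kind of behaviour the 18% may have had in mind.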

[pullquote] It’s safe to say that even when most of our participants are drivers, autonomous cars are expected to keep to the speed limit quite strictly. [/pullquote] Note that most of our participants for this poll know how to drive. About 42% of them drive a car at least once every day, 33% drive a car one to six times a week, and only 5% are not licensed to drive. We didn’t find much connection between how often people drive and how strictly they think autonomous cars should follow speed limits. But it’s safe to say that even when most of our participants are drivers, who probably have experience driving above speed limits, autonomous cars are expected to keep to the speed limit quite strictly.

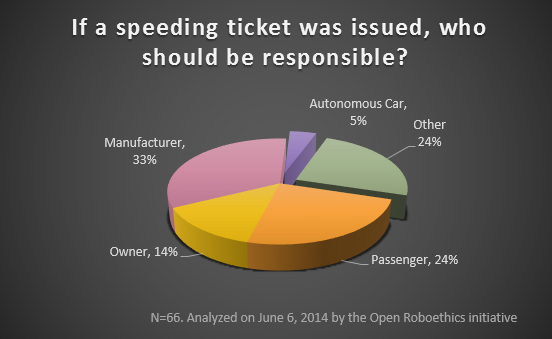

If a speeding ticket was issued, who should be responsible?

So let’s say that an autonomous car is designed to be able to go above the speed limit. While autonomously driving with an adult passenger on a highway, it goes above the speed limit. The passenger is not the owner of the car. If a speeding ticket was issued, who should be responsible for the violation of the traffic law?

Mind you, when we were coming up with the choices for this question, the members of the Open Roboethics initiative had a bit of a discussion. While one member was pretty sold on saying that the owner of the car should be responsible, another member found that odd.

[pullquote]While 33% said that the manufacturer should be responsible, 24% and 14% said that the passenger and the owner, respectively, should be responsible.[/pullquote] It seems that, when it comes to the responsibility question, people are quite torn about it. While 33% said that the manufacturer should be responsible, 24% and 14% said that the passenger and the owner, respectively, should be responsible. Somewhat unsurprisingly, only 5% said that the autonomous car should be responsible.

More interestingly, 24% of the participants chose ‘other’ and gave us more conditional answers to this question, perhaps hinting at the complexity of figuring out responsibility when it comes to things that autonomous machines do. Most people who gave us a conditional answer said that whoever allowed the car to speed, perhaps by modifying one of the car’s operating settings, should be responsible, whether that is the owner, the passenger, or the manufacturer. Some also said that autonomous cars should not be issued traffic tickets, period.

For now, it looks like people are willing to blame the manufacturer if anything, even violating speed limits, goes wrong.

The results of the poll presented in this post were analyzed and written up by AJung Moon, Camilla Bassani, Fausto Ferreira, and Shalaleh Rismani at the Open Roboethics initiative.

Anonymous

July 4, 2014

Being “autonomous” implies that the software is liable. The issue is product liability.